It’s undeniable that generative AI and LLMs have transformed how developers work. Hours of hunting through Stack Overflow can be avoided by asking your AI code assistant, multi-file context can be fed to the AI from inside your IDE, and applications can be built (read: generated) without the author writing a single line of code.

Much has been said over the last few years about how AI will replace developers, but we’ll leave that for another day. Instead, we’ll look at whether AI’s usefulness is reserved for experienced developers, or whether people learning to code can use it to enhance and accelerate their learning without picking up bad habits.

Understanding the Learning Process in Software Development

Before we look at how we use AI to enhance learning, it’s important to understand how people learn in a more general sense.

A few years ago I wrote about how important it is for developers to focus on how they learn, as much as what they learn, and that just building isn’t enough to ensure deep learning.

Instead, we first need to understand the process of learning. Only then can we get a better sense of how well we actually understand something, and so learn in a more focused way.

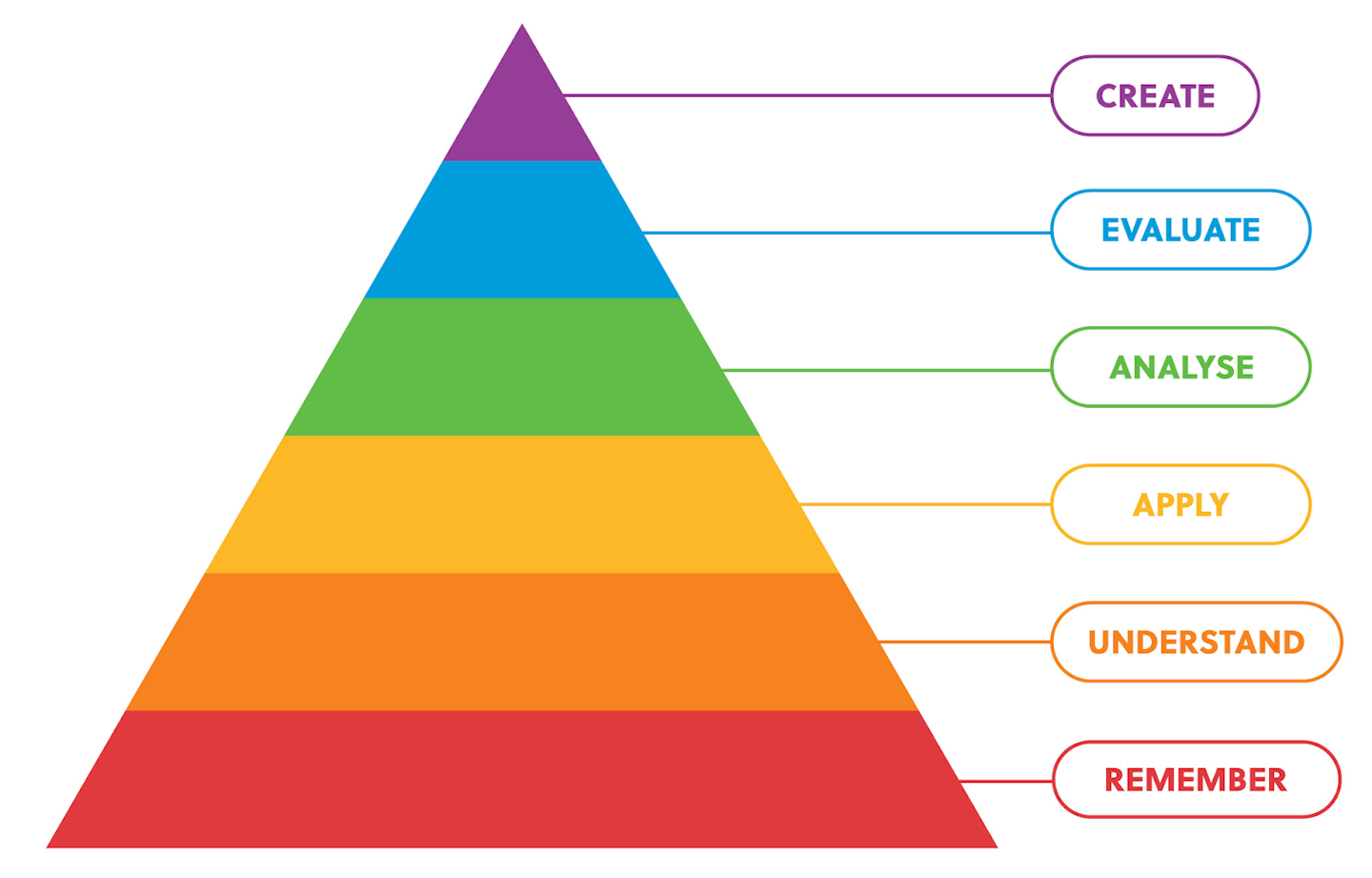

As Bloom’s Taxonomy of learning (above) shows, there is a clear progression in how we learn about something. It begins with the ability to remember or recall information, and ends at the point where we have such a strong grasp of a subject that we can apply that knowledge creatively in novel situations.

To understand this in a basic way, let’s consider the concept of API calls. At the beginning of your journey, they are entirely unfamiliar and you don’t know anything about them. Then, you learn about them, and could describe them to someone else, but wouldn’t know how to write one yourself.

Then you follow a tutorial, and learn a few different ways to make API calls. Over time you learn about the pros and cons of each approach, and use this information to make decisions. Finally, you find yourself building a new piece of software, and implement API calls based on your knowledge of the subject. At this point, you’ve reached the top of the pyramid, and can say that you have a deep understanding of the subject.
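To make the "follow a tutorial" stage concrete, here is a minimal sketch of one way to make an API call, using only Python's standard library. The function names are invented for illustration, the endpoint in the closing comment is hypothetical, and the sketch assumes the API returns a JSON object.

```python
import json
import urllib.request


def build_request(url: str) -> urllib.request.Request:
    """Prepare a GET request that asks the server for JSON."""
    return urllib.request.Request(
        url,
        headers={"Accept": "application/json"},
        method="GET",
    )


def parse_body(body: bytes) -> dict:
    """Decode a JSON response body (assumed to be a JSON object)."""
    return json.loads(body.decode("utf-8"))


def fetch_json(url: str, timeout: float = 10.0) -> dict:
    """Send the request and return the parsed JSON payload."""
    with urllib.request.urlopen(build_request(url), timeout=timeout) as response:
        return parse_body(response.read())


# Hypothetical usage (the endpoint below is a placeholder, not a real API):
# user = fetch_json("https://api.example.com/users/42")
```

Being able to explain why the request is built separately from the parsing step, or when you would reach for a third-party HTTP library instead, is the kind of judgement that sits higher up the pyramid than simply copying the snippet.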

Learning how to build software is a messy process. There are thousands of concepts and an almost infinite choice of languages, libraries and frameworks, and the field is developing at a staggering rate compared to many other pursuits.

Using generative AI as a teacher might be a relatively new method, but the process of learning remains the same, regardless of the methods used.

Using AI as an On-Ramp

When somebody wants to learn about a subject, they often gather information, study it, apply what they learn from it, and evaluate the results.

In a software engineering context, developers find an issue, and often need to learn new information (or new techniques or skills) in order to try a potential solution. They might have some understanding of the subject, or have used some of the necessary tech in the past, but need to dig deeper.

AI can really shine in these situations. We can tell it what we know, describe the experience we have had in the past, and then guide it, making the process more efficient than it would be if we had to wade through information we already knew using more manual methods.

Perhaps you understand a subject pretty well, but want some ideas for how to use it in novel contexts? AI is a great sounding board for exploring ideas that build on what you already know and are suited to where you are in your learning journey.

Accuracy and Relevancy

It goes without saying, at this stage, that while AI tools are powerful, they are not without their issues.

Much has been written about the accuracy of information created by generative AI, and this is certainly one of the biggest issues facing developers using it to learn how to code.

Many of you (or at least those using AI while coding) will have felt the frustration of an AI chatbot hallucinating, feeding you fundamentally flawed or broken code over and over, even after being given hints as to how to solve the issues.

It’s incorrect, and it’s confidently incorrect. That’s a dangerous duo.

It’s even more dangerous when you consider that the person using it, in this instance, is inexperienced and lacks the knowledge to spot the problem. So while the AI is incorrect, the learner doesn’t know it.

Before you know it, they’ve used the (incorrect) information, and learnt a new (bad) habit as a result.

So how do you mitigate it? Well, first of all, you can request that the LLM keep track of and share all of its sources. Secondly, you need to check that these sources are (a) real, (b) accurately used in the response, and (c) trustworthy.

In an academic setting, if writing on a subject is to be believed, then the sources of that information must be included. Why would we hold an LLM to a different - or lower - standard?

While this might seem like a time-consuming way to learn, it’s still far more efficient than learning alone, without AI by your side to guide you. You just need to meet it half-way.

Avoiding Outdated Information

We live in an era where information is abundant and easily accessible. But how do you determine what’s relevant or outdated? AI models are usually several months behind the ‘live’ web (i.e. pages we can find through search) because they are trained on a snapshot of data that ends at a cutoff date, and new models are only trained every so often.

This means that, unless the tool also searches the web, its information is only as accurate as it was when that snapshot was taken.

Information being six months out of date might not be an issue in many contexts, but six months is a long time in the world of development. In that time, a new version of the API you’re using could have been released, or there could have been major changes to how it works. If you don’t know about these changes, you’ll end up with broken or poor quality code, and not know why.
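One small habit that helps here is comparing the version of a library you actually have installed against the version an AI answer appears to target. The sketch below is illustrative only: the helper names are made up, and it assumes plain dotted version numbers such as `2.31.0` where a change in the first number signals a major release.

```python
from importlib.metadata import PackageNotFoundError, version


def major_version(version_string: str) -> int:
    """Return the leading major number from a version such as '2.31.0'."""
    return int(version_string.split(".")[0])


def is_possibly_outdated(assumed: str, installed: str) -> bool:
    """True when the installed major version is newer than the one the answer targets."""
    return major_version(installed) > major_version(assumed)


def check_package(name: str, assumed: str) -> str:
    """Describe how the installed package compares to the version an answer assumes."""
    try:
        installed = version(name)
    except PackageNotFoundError:
        return f"{name} is not installed"
    if is_possibly_outdated(assumed, installed):
        return (
            f"{name} {installed} is installed, but the answer targets "
            f"{assumed}: check the changelog"
        )
    return f"{name} {installed} is not a newer major version than {assumed}"
```

For example, if an assistant hands you code written for version 1.x of a package, calling `check_package("some-package", "1.4")` (the package name here is a placeholder) gives you a quick prompt to go and read the release notes before trusting the snippet.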

So again, meet it half-way and combine AI with traditional search to ensure you’re finding the latest - and most accurate - information that’s out there.

Conclusion

AI’s uses are immense, for seniors and juniors (or coding newbies) alike. Like any powerful tool, generative AI must be used carefully and responsibly: used effectively, it can supercharge your ability to learn to code, but used naively, it can derail and misdirect you on your journey.

We hope this article has helped you to reflect on how you use AI to continue learning to code.

If you would like to hear more on this subject, we recommend you check out Du'An S. Lightfoot’s session from the World Congress 2024 below, and as always, let us know what you think on socials.