Last week (Dec 7th) Google held a virtual event where they presented a series of demos for their newest AI model, Gemini. Gemini is Google’s competitive response to ChatGPT. And although Google did release Bard in March, it felt like a rushed response to GPT-4, and was not embraced by the public in the same way GPT was.

The excitement around Gemini hasn’t necessarily been positive or even product-focused. We will get to the controversy shortly, but the product itself had some really cool stuff that got overlooked. In this article, we are going to discuss the demo and explain the backstory, and then look at Gemini’s features and how they differ from GPT, which you probably haven’t heard much about.

DeepMind Gemini demo

Below is the demo that caused a bunch of negative press for Google — on social media and from news outlets. It’s worth skipping through this video so that you can get the context for the criticisms which we’re going to discuss.

If you don’t know, this was one of many demos presented at Google’s virtual event. People are focusing on this video specifically because it “demonstrates” the most impressive capabilities of the new multimodal AI: speech-to-text, speech response, video and image recognition, complex comprehension, and simplified prompting.

What The Verge, Bloomberg, CNBC, the BBC (and many others) reported was that, in the demo above, the prompting was either hidden or heavily paraphrased to streamline the interaction between AI and user.

Google’s response and blog post have confirmed this. But what exactly was misleading, and to what extent?

There are a couple of examples in the video that could be considered misleading. One example was when the presenter asked Gemini to create a game based on the world map [02:05]. The prompt in the video was the following: “based on what you see [a world map] come up with a game idea…” but from the developer docs we can see the actual prompt was a little different: “Let's play a game. Think of a country and give me a clue. The clue must be specific enough that there is only one correct country. I will try pointing at the country on a map.”

So there was additional information and direction given to the AI. There is quite a difference between prompting with a simple question and prompting with a block of text. The criticism seems valid because the video gives the impression that Gemini has a higher level of comprehension and initiative than GPT, which needs more structure and direction.

To be fair, Google didn’t hide the fact that prompts were different or that the video was edited. In the YouTube video description we find this disclaimer, “For the purposes of this demo, latency has been reduced, and Gemini outputs have been shortened for brevity.” And they told CNBC that the video was “an illustrative depiction.” From Google’s perspective, they were simply trying to convey what can be done with Gemini — not really focused on the how.

I’m hesitant to be too critical of this demo, because I imagine this type of “editing” happens a lot in the industry and there is some logic behind it. What I am more interested in is the technical data comparing GPT and Gemini presented by Google.

Gemini vs ChatGPT

Keep in mind that the data we are about to discuss is from Google. They have conducted their own research and tests and concluded that Gemini outperformed ChatGPT in almost all areas. Apply a healthy amount of scepticism here.

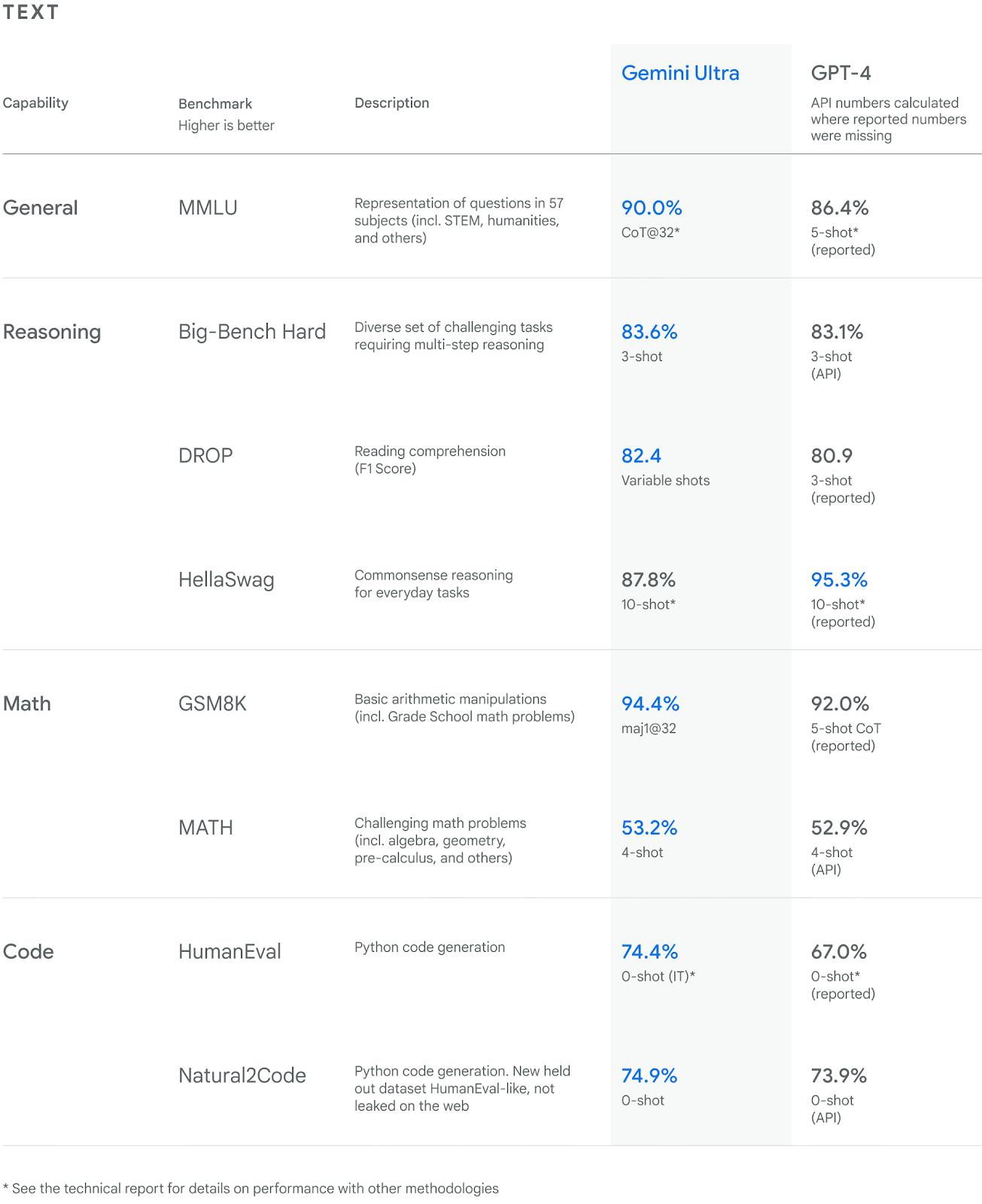

Here is the text comprehension comparison between Gemini Ultra and GPT-4.

According to Google, Gemini scored higher in every category except HellaSwag (common sense reasoning for everyday tasks). The most notable difference was Python code generation, where Gemini scored 74.4% and GPT 67% (a gap of 7.4 percentage points). The data suggests Gemini is better at coding, which, if true, is going to be great for developers. Outside of coding, there was only a 1-2 point difference between the two models in most of these categories. Is a 2-point difference even going to be noticeable for the user?
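To put that coding gap in perspective, here is a quick sketch of the arithmetic. It uses only the two scores quoted above; the function and variable names are our own, and the "relative gain" is just one possible way of framing the difference, not a figure Google reported.

```python
# Scores quoted above from Google's own comparison (Python code generation).
gemini_ultra = 74.4  # percent
gpt4 = 67.0          # percent


def point_gap(a: float, b: float) -> float:
    """Difference in percentage points between two scores."""
    return round(a - b, 1)


def relative_gain(a: float, b: float) -> float:
    """How much higher score a is than score b, as a percentage of b."""
    return round((a - b) / b * 100, 1)


print(point_gap(gemini_ultra, gpt4))      # gap in percentage points
print(relative_gain(gemini_ultra, gpt4))  # gap relative to GPT's score
```

The point gap and the relative gain are different numbers, which is why it helps to say "points" rather than "percent" when comparing benchmark scores.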

Under each percentage score, we can see the test setup that was used. I don’t understand each of these fully, but you can clearly see that different setups were applied, which implies the baseline wasn’t the same for GPT and Gemini. Fireship does a good breakdown of these tests.

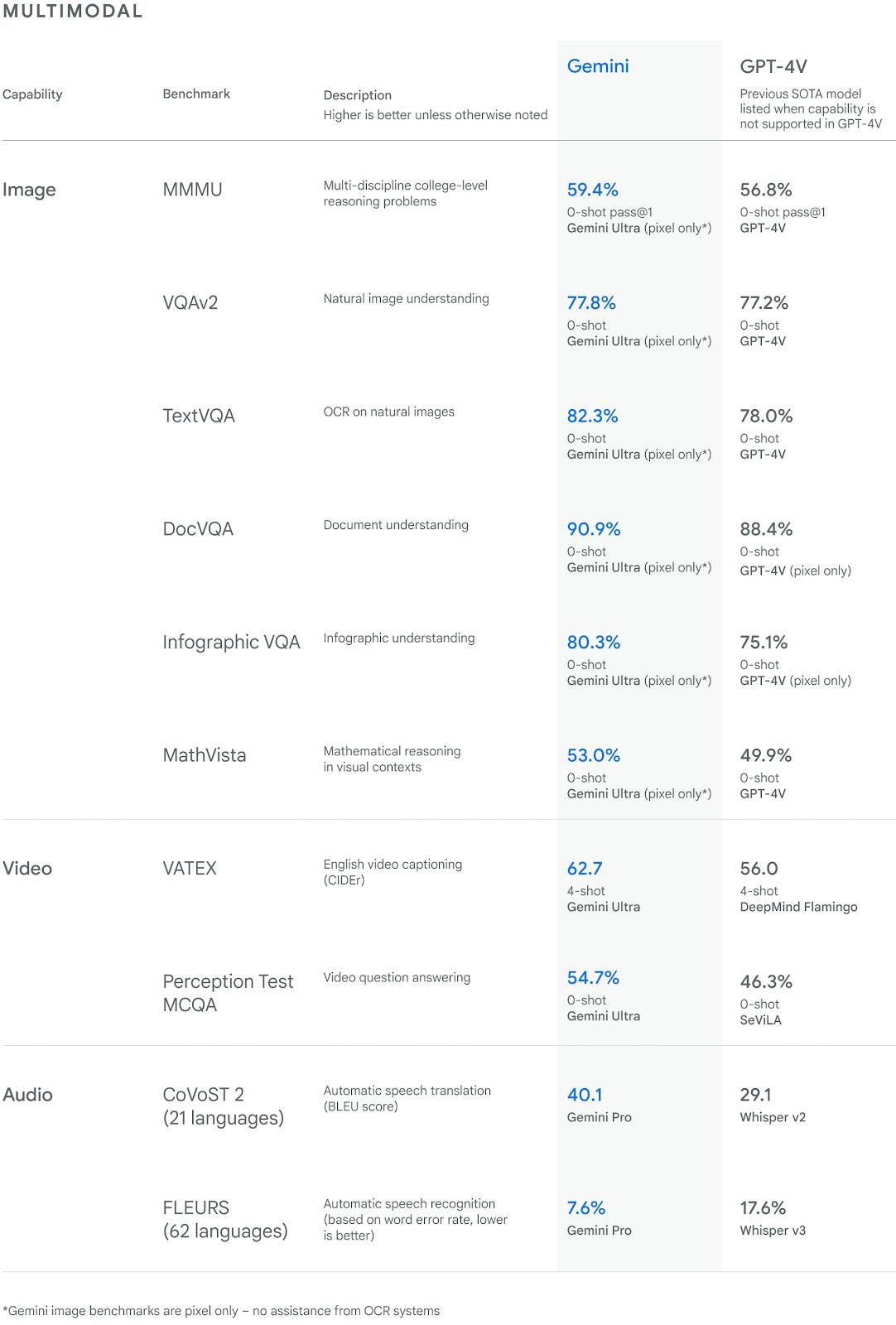

Up next, we have the multimodal comparison (image and video):

Again, Gemini seems to be more advanced on the multimodal front. It is notably better at automatic speech translation, automatic speech recognition, and English video captioning. That seems plausible given Google’s range of industry-leading products. The multimodal results seem to be the biggest argument for using Gemini, especially for users interested in video and audio.

I have a feeling that this data is going to be outdated in less than three months. OpenAI is on the verge of another update or release, and whatever it is, it’s going to be big. The differences here are not big enough to move users, especially once an update hits.

Gemini does have some cool features

We’ve spent a lot of time critiquing Google and their methods, but looking past the video editing and the data comparison, there’s a lot to be excited about. Let’s go through a few features that we haven’t seen before.

1. User experience and interactive features: The interactive features are quite cool. Of course they are not entirely necessary, but they do add to the experience and streamline the back and forth between user and AI. For example, in the screenshot below, Gemini has indicated on a maths test (image) which questions were answered correctly and incorrectly.

Going one step further, you could click on a specific box to get further information. Gemini then identifies in the handwriting where the person went wrong.

2. Education and learning: With the new image interactive features, you can see how beneficial Gemini will be for learning and teaching. In the screenshot below, Gemini is teaching a concept and then creating a sample question to test the user.

Not only can you now check your work, but you can also ask this AI to be your personal tutor. Of course, GPT can do the same thing, but we haven’t seen these interactive features which make the process a lot smoother. It’s going to be interesting to see what can be done with language learning — since Google already has a lot of infrastructure here.

3. Data extraction: One of the demos showed how Gemini could be used in academia and for scientific research. They fed Gemini two hundred thousand papers and asked it to identify relevance based on a query. Gemini found the relevant papers along with annotations (source material to justify findings). You can probably imagine how useful this tech is going to be when trained on company data.
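To make the data extraction idea concrete, here is a toy sketch of the workflow: filter a collection of papers by a query and keep the evidence for each match. This is not how Gemini works internally. Plain keyword matching stands in for the model, and the `Paper` class, the `relevant_papers` function, and the sample papers are all made-up placeholders for illustration.

```python
from dataclasses import dataclass


@dataclass
class Paper:
    title: str
    abstract: str


def relevant_papers(papers, query_terms, min_hits=1):
    """Return (paper, matched_terms) pairs for papers mentioning the query terms.

    Keyword matching is a stand-in for the model's judgement; the matched
    terms play the role of the annotations that justify each result.
    """
    terms = [t.lower() for t in query_terms]
    results = []
    for paper in papers:
        text = f"{paper.title} {paper.abstract}".lower()
        matched = [t for t in terms if t in text]
        if len(matched) >= min_hits:
            results.append((paper, matched))
    return results


# Made-up sample data, for illustration only.
papers = [
    Paper("Gene editing in mice", "We study CRISPR knockouts of a target gene."),
    Paper("Galaxy formation", "Simulations of dark matter halos."),
]

hits = relevant_papers(papers, ["crispr", "gene"])
for paper, matched in hits:
    print(paper.title, matched)
```

The part worth noticing is the shape of the result: every match comes back with the evidence that justified it, which is what made the demo output easy to check.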

Gemini release date

When will we be able to get our hands on Gemini? Apparently, you can access a lite version of Gemini (called Gemini Pro) using Bard. This is supposed to be available on December 13th. But the Ultra version (which is the multi-modal version) won’t be available until early 2024. Keep an eye out for Google’s next announcement.