Denis Washington & Olli Salonen

Kafka Streams Microservices

#1 about 3 minutes

Core concepts of Kafka and Kafka Streams

Kafka is a distributed event streaming platform using topics, partitions, producers, and consumers for scalable data processing.
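To make the topic and partition vocabulary concrete, here is a minimal in-memory sketch in plain Python. It does not use a Kafka client. The `Topic` class and `partition_for` helper are hypothetical names for illustration, and CRC32 is only a stand-in for a stable key hash (Kafka's default partitioner uses murmur2 for keyed records). What it shows is that records with the same key land in the same partition and keep their order there.

```python
import zlib


def partition_for(key, num_partitions):
    """Pick a partition from a stable hash of the key (CRC32 is a stand-in)."""
    return zlib.crc32(key.encode("utf-8")) % num_partitions


class Topic:
    """In-memory stand-in for a topic: a list of append-only partition logs."""

    def __init__(self, num_partitions=3):
        self.partitions = [[] for _ in range(num_partitions)]

    def produce(self, key, value):
        """Append a record and return its (partition, offset)."""
        partition = partition_for(key, len(self.partitions))
        log = self.partitions[partition]
        log.append((key, value))
        return partition, len(log) - 1

    def consume(self, partition, offset=0):
        """Read one partition from the given offset onward."""
        return self.partitions[partition][offset:]
```

A consumer that remembers the last offset it processed can resume with `consume(partition, offset)` without rereading earlier records.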

#2 about 6 minutes

Evolving from classic microservices to event-driven design

The architecture evolved from traditional request-response microservices to an event-driven model using Kafka as the single source of truth to improve decoupling and extensibility.

#3 about 3 minutes

Understanding the system topology and failure scenarios

The system uses an API service with materialized views for robust reads and command processing topologies that can recover from failures by replaying input topics.
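Recovery by replay can be sketched as a fold over the input log. The snippet below is an assumption-laden illustration in plain Python, not the speakers' code: `apply_event` and `rebuild_view` are hypothetical names, and the convention that a `None` value deletes a key mirrors Kafka's tombstone records.

```python
def apply_event(view, event):
    """Fold one (key, value) record into the view; a None value deletes the key."""
    key, value = event
    if value is None:
        view.pop(key, None)
    else:
        view[key] = value
    return view


def rebuild_view(input_log):
    """Recreate the materialized view from scratch by replaying the whole log."""
    view = {}
    for event in input_log:
        apply_event(view, event)
    return view
```

Because the view is a deterministic function of the log, a service that loses its local state can call `rebuild_view` again and arrive at the same result.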

#4 about 4 minutes

Building a searchable product catalog pipeline

A data pipeline cleans, deduplicates, and amends product data from various sources, then streams it to Elasticsearch to create a searchable materialized view.
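The clean, deduplicate, and amend stages could look roughly like the following plain-Python sketch. Everything here is assumed for illustration: the `sku`, `name`, and `category` fields, the first-record-wins deduplication rule, and the dictionary that stands in for the Elasticsearch index.

```python
def clean(record):
    """Normalize the fields that tend to differ between sources."""
    return {
        "sku": record["sku"].strip().upper(),
        "name": " ".join(record["name"].split()),
    }


def run_pipeline(records, categories):
    """Clean, deduplicate by SKU (first record wins), and amend with a category."""
    index = {}  # stand-in for the search index, keyed by SKU
    for raw in records:
        product = clean(raw)
        if product["sku"] in index:
            continue  # duplicate of a product that was already indexed
        product["category"] = categories.get(product["sku"], "unknown")
        index[product["sku"]] = product
    return index


def search(index, term):
    """Naive substring search over product names, returning matching SKUs."""
    term = term.lower()
    return sorted(sku for sku, p in index.items() if term in p["name"].lower())
```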

#5 about 2 minutes

Implementing inventory management using a CQRS pattern

A command processing pipeline implements the CQRS pattern by separating write operations from read models, using an event topic as the source of truth for inventory data.
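A minimal sketch of the CQRS split, in plain Python with a list standing in for the event topic. The `StockChanged` event, the `delta` field, and the rule that stock may not go negative are assumptions made for the example, not details from the talk.

```python
def stock_from_events(events, sku):
    """Write-side state: current stock derived from the event log."""
    return sum(e["delta"] for e in events if e["sku"] == sku)


def handle_command(events, command):
    """Write side: validate a command and append an event only if it is valid."""
    sku, delta = command["sku"], command["delta"]
    if stock_from_events(events, sku) + delta < 0:
        return None  # rejected: the change would drive stock negative
    event = {"type": "StockChanged", "sku": sku, "delta": delta}
    events.append(event)
    return event


def project(events):
    """Read side: build a queryable view purely from the events."""
    view = {}
    for e in events:
        view[e["sku"]] = view.get(e["sku"], 0) + e["delta"]
    return view
```

Rejected commands never reach the event list, so every read model derived from it sees only valid state changes.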

#6 about 7 minutes

Solving uniqueness constraints and race conditions

Race conditions caused by eventual consistency are solved by using manually updated state stores and repartitioning command streams to ensure data locality for validation.
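The idea of repartitioning for data locality can be sketched as follows. This is plain Python under stated assumptions: commands carry a `name` field that must be unique, CRC32 stands in for the partitioner's hash, and `UniquenessValidator` is a hypothetical stand-in for a processor with a local state store. Because all commands for one name are routed to the same partition, a single validator sees them in order and can update its store before the next command arrives.

```python
import zlib


class UniquenessValidator:
    """One instance per partition, each with its own local state store."""

    def __init__(self):
        self.taken = set()

    def process(self, command):
        name = command["name"]
        if name in self.taken:
            return {"type": "Rejected", "name": name, "user": command["user"]}
        # Update the store immediately, before the next command is processed.
        self.taken.add(name)
        return {"type": "Accepted", "name": name, "user": command["user"]}


def repartition(commands, num_partitions):
    """Route each command by the field that must be unique."""
    partitions = [[] for _ in range(num_partitions)]
    for command in commands:
        p = zlib.crc32(command["name"].encode("utf-8")) % num_partitions
        partitions[p].append(command)
    return partitions


def run(commands, num_partitions=4):
    """Validate every partition with its own validator and collect the results."""
    results = []
    for partition in repartition(commands, num_partitions):
        validator = UniquenessValidator()
        results.extend(validator.process(c) for c in partition)
    return results
```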

#7 about 3 minutes

Opportunistic data consumption for new features

New features like automatic warranty extensions can be added by deploying new services that consume existing data streams without modifying the original producers.
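What makes this possible is that each consumer tracks its own position in the log. The sketch below models that in plain Python. The `Consumer` class, the order fields, and the price threshold for a warranty extension are all hypothetical and chosen only to make the example concrete.

```python
class Consumer:
    """Reads a shared log at its own offset, independent of other consumers."""

    def __init__(self, log, handler):
        self.log = log
        self.handler = handler
        self.offset = 0

    def poll(self):
        """Process every record that has arrived since the last poll."""
        while self.offset < len(self.log):
            self.handler(self.log[self.offset])
            self.offset += 1


def make_warranty_handler(extended, min_price=500):
    """Hypothetical rule: orders at or above min_price get a warranty extension."""
    def handler(order):
        if order["price"] >= min_price:
            extended.append(order["order"])
    return handler
```

A consumer created later starts at offset 0 and catches up on the existing records, while the producer and the earlier consumers stay unchanged.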

#8 about 5 minutes

Key challenges and lessons from a pure Kafka approach

A pure Kafka Streams architecture brings challenges in four areas: development complexity, stateful operations, transaction configuration that must be set up carefully, and operational tooling.

#9 about 12 minutes

Evolving the architecture with a hybrid database approach

The architecture can be evolved by integrating traditional databases to simplify complex stateful logic, while using connectors to publish all state changes back to Kafka.
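One common way to get database state changes into Kafka reliably is the transactional outbox pattern, sketched below with Python's built-in `sqlite3` module. This is a swapped-in technique for illustration and not necessarily what the speakers used: the summary only says that connectors publish the changes. The table names, the `relay` function, and the list that stands in for a topic are all assumptions.

```python
import json
import sqlite3


def open_db():
    """Create an in-memory database with a state table and an outbox table."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE inventory (sku TEXT PRIMARY KEY, quantity INTEGER NOT NULL)")
    conn.execute("CREATE TABLE outbox (id INTEGER PRIMARY KEY AUTOINCREMENT, payload TEXT NOT NULL)")
    return conn


def set_quantity(conn, sku, quantity):
    """Write the new state and its change record in one transaction."""
    with conn:  # commits both statements together, or rolls both back on error
        conn.execute(
            "INSERT OR REPLACE INTO inventory (sku, quantity) VALUES (?, ?)",
            (sku, quantity),
        )
        conn.execute(
            "INSERT INTO outbox (payload) VALUES (?)",
            (json.dumps({"sku": sku, "quantity": quantity}),),
        )


def relay(conn, topic, last_id=0):
    """Stand-in for a connector: publish outbox rows newer than last_id."""
    rows = conn.execute(
        "SELECT id, payload FROM outbox WHERE id > ? ORDER BY id", (last_id,)
    ).fetchall()
    for row_id, payload in rows:
        topic.append(json.loads(payload))
        last_id = row_id
    return last_id
```

The relay returns the last published row id, so calling it again with that value publishes only the changes made since.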

Related jobs

Jobs that call for the skills explored in this talk.

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

Matching moments

03:41 MIN

Decoupling microservices with event streams

From event streaming to event sourcing 101

01:24 MIN

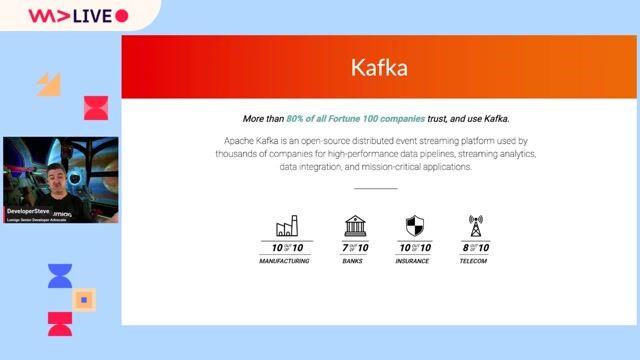

Understanding Kafka's role in modern architectures

Tips, Techniques, and Common Pitfalls Debugging Kafka

04:23 MIN

A traditional approach to streaming with Kafka and Debezium

Python-Based Data Streaming Pipelines Within Minutes

04:50 MIN

Implementing a CQRS banking demo with Kafka

From event streaming to event sourcing 101

04:11 MIN

Analyzing a complex Kafka architecture at Netflix

Tips, Techniques, and Common Pitfalls Debugging Kafka

01:17 MIN

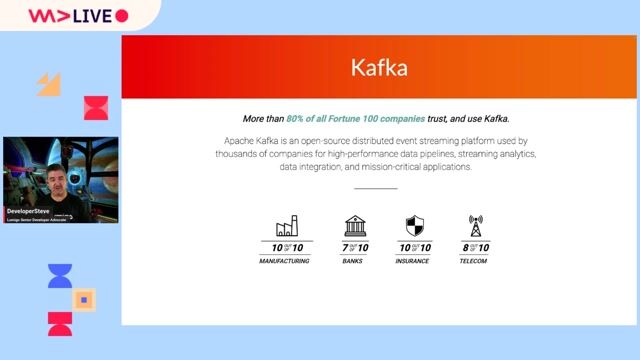

Recapping Kafka's capabilities for real-time data feeds

Let's Get Started With Apache Kafka® for Python Developers

07:20 MIN

Tracing the evolution of microservice communication patterns

Rethinking Reactive Architectures with GraphQL

02:57 MIN

Understanding the purpose and core use cases of Kafka

Let's Get Started With Apache Kafka® for Python Developers

Featured Partners

Related Videos

30:51

30:51Why and when should we consider Stream Processing frameworks in our solutions

Soroosh Khodami

46:24

46:24The Rise of Reactive Microservices

David Leitner

38:50

38:50Let's Get Started With Apache Kafka® for Python Developers

Lucia Cerchie

54:29

54:29Tips, Techniques, and Common Pitfalls Debugging Kafka

DeveloperSteve

45:36

45:36What is a Message Queue and when and why would I use it?

Clemens Vasters

28:12

28:12Scaling: from 0 to 20 million users

Josip Stuhli

20:28

20:28Introducing a Digital Service Catalog for speed and scale

Bastian Heilemann & Akash Manjunath

44:37

44:37Advanced Caching Patterns used by 2000 microservices

Natan Silnitsky

Related Articles

View all articles.gif?w=240&auto=compress,format)

From learning to earning

Jobs that call for the skills explored in this talk.

Confideck GmbH

Vienna, Austria

Remote

Intermediate

Senior

Node.js

MongoDB

TypeScript

consider it GmbH

Hamburg, Germany

Rust

Azure

Linux

DevOps

Python

+5

Teads

Paris, France

Remote

Azure

Kafka

Terraform

TypeScript

+2

Rigobeert Cremers

Ghent, Belgium

Intermediate

API

Java

Azure

Kafka

Docker

+5

Teads

Paris, France

Remote

Senior

Azure

Kafka

Terraform

TypeScript

+2

Teads

Canton of Montpellier-3, France

Remote

Azure

Kafka

Terraform

TypeScript

+2

Michael Page International (Deutschland) GmbH

Frankfurt am Main, Germany

Azure

Terraform

Kubernetes