Daniel Madalitso Phiri

Vision for Websites: Training Your Frontend to See

#1 (about 1 minute)

Defining vision as the ability to deduce and understand

The concept of vision for websites is redefined from simply seeing to the ability to deduce, understand, and act on information.

#2 (about 4 minutes)

Demo of a multimodal e-commerce search application

A live demonstration showcases an e-commerce store where users can search for products using both text queries and by uploading images.

#3 (about 2 minutes)

What is multimodality in artificial intelligence?

Multimodality lets search queries use, or combine, several media types such as text, images, and audio. This captures more context than text alone and makes interaction with the interface more natural.

#4 (about 2 minutes)

Why multimodal AI creates richer user experiences

Multimodal interfaces provide more natural and context-aware interactions, moving beyond simple keyword searches to a more intuitive experience.

#5 (about 4 minutes)

Differentiating generative AI from embedding models

Embedding models encode information as numerical representations (vectors), whereas generative models produce new content such as text or images.
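To make "numerical representation" concrete, here is a toy sketch in TypeScript (chosen because the demo later uses a Next.js frontend). The `toyEmbed` function is hypothetical and is not a real embedding model: it hashes words into a fixed number of buckets. It only illustrates the contract an embedding model exposes, namely that any input, short or long, becomes a vector of the same length. Unlike a learned model, it captures no meaning.

```typescript
// Toy stand-in for an embedding model (NOT a real one): hashes each lowercase
// word into one of `dim` buckets, then L2-normalises the counts. A learned
// model would place semantically similar inputs near each other; this only
// shows the shape of the output: any input -> fixed-length number[].
function toyEmbed(text: string, dim: number = 8): number[] {
  const vec: number[] = [];
  for (let i = 0; i < dim; i++) vec.push(0);

  const words = text
    .toLowerCase()
    .split(/[^a-z0-9]+/)
    .filter((w) => w.length > 0);

  for (const word of words) {
    // Simple 32-bit rolling hash of the word.
    let h = 0;
    for (let i = 0; i < word.length; i++) {
      h = (h * 31 + word.charCodeAt(i)) >>> 0;
    }
    vec[h % dim] += 1;
  }

  // Normalise to unit length so vectors can be compared by direction.
  const norm = Math.sqrt(vec.reduce((sum, x) => sum + x * x, 0));
  return norm === 0 ? vec : vec.map((x) => x / norm);
}

// Usage: inputs of very different lengths map to vectors of the same size.
const shortVec = toyEmbed("red running shoes");
const longVec = toyEmbed("a much longer product description about red running shoes for trail use");
console.log(shortVec.length, longVec.length); // 8 8
```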

#6 (about 4 minutes)

How vector search works by measuring distance

Vector search operates by converting a query into an embedding and finding the closest, most semantically similar items in a multidimensional space.
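The distance measurement can be sketched as a brute-force nearest-neighbour search using cosine similarity. This is a minimal illustration with made-up three-dimensional vectors; production vector databases such as Weaviate use approximate indexes (for example HNSW) rather than scoring every item, and the distance metric they use is configurable.

```typescript
type Vector = number[];

interface Item {
  id: string;
  vector: Vector;
}

// Cosine similarity: 1 = same direction, 0 = orthogonal.
// (Cosine distance, a common vector-database metric, is 1 - similarity.)
function cosineSimilarity(a: Vector, b: Vector): number {
  if (a.length !== b.length) throw new Error("dimension mismatch");
  let dot = 0;
  let normA = 0;
  let normB = 0;
  for (let i = 0; i < a.length; i++) {
    dot += a[i] * b[i];
    normA += a[i] * a[i];
    normB += b[i] * b[i];
  }
  if (normA === 0 || normB === 0) return 0;
  return dot / (Math.sqrt(normA) * Math.sqrt(normB));
}

// Brute-force k-nearest-neighbour search: score every item, keep the top k.
function nearest(query: Vector, items: Item[], k: number): { id: string; score: number }[] {
  return items
    .map((item) => ({ id: item.id, score: cosineSimilarity(query, item.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k);
}

// Hypothetical catalogue with hand-written vectors.
const catalog: Item[] = [
  { id: "sneaker", vector: [0.9, 0.1, 0.0] },
  { id: "boot", vector: [0.7, 0.3, 0.1] },
  { id: "guitar", vector: [0.0, 0.2, 0.9] },
];
console.log(nearest([1, 0, 0], catalog, 2).map((r) => r.id)); // [ 'sneaker', 'boot' ]
```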

#7 (about 2 minutes)

Creating a unified space for multimodal search

Different data types such as text, images, and audio are processed by modality-specific encoders and mapped into a single, shared vector space, which makes cross-modal queries possible.
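A unified space can be sketched as one index that accepts vectors from any encoder, provided every encoder emits vectors of the same dimension. The encoders themselves are assumed here: the vectors below are hand-written placeholders, assumed to be unit length so that a dot product equals cosine similarity. The optional `target` filter shows a cross-modal query, such as a text query that returns only images.

```typescript
type Modality = "text" | "image" | "audio";

interface Entry {
  id: string;
  modality: Modality;
  vector: number[]; // assumed unit length, produced by that modality's encoder
}

// Hypothetical shared dimension: every encoder must emit vectors of this size,
// otherwise they cannot live in the same space.
const DIM = 3;

function addToIndex(index: Entry[], entry: Entry): void {
  if (entry.vector.length !== DIM) {
    throw new Error(`expected ${DIM} dimensions, got ${entry.vector.length}`);
  }
  index.push(entry);
}

// Dot product equals cosine similarity for unit-length vectors.
function dot(a: number[], b: number[]): number {
  let s = 0;
  for (let i = 0; i < a.length; i++) s += a[i] * b[i];
  return s;
}

// Cross-modal search: the query vector may come from any encoder; optionally
// restrict results to one target modality (e.g. text query -> images).
function crossModalSearch(index: Entry[], query: number[], k: number, target?: Modality): Entry[] {
  return index
    .filter((e) => target === undefined || e.modality === target)
    .map((e) => ({ e, score: dot(query, e.vector) }))
    .sort((x, y) => y.score - x.score)
    .slice(0, k)
    .map((r) => r.e);
}

const sharedIndex: Entry[] = [];
addToIndex(sharedIndex, { id: "txt-sneaker", modality: "text", vector: [1, 0, 0] });
addToIndex(sharedIndex, { id: "img-sneaker", modality: "image", vector: [0.8, 0.6, 0] });
addToIndex(sharedIndex, { id: "img-guitar", modality: "image", vector: [0, 0.6, 0.8] });
addToIndex(sharedIndex, { id: "aud-guitar", modality: "audio", vector: [0, 0, 1] });

// Pretend [1, 0, 0] is the text encoder's output for "sneaker".
console.log(crossModalSearch(sharedIndex, [1, 0, 0], 1, "image")[0].id); // img-sneaker
```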

#8 (about 9 minutes)

Implementing text-based image search with Weaviate

A code walkthrough demonstrates how to build a text-to-image search feature using a Next.js frontend and a Weaviate backend with a `nearText` query.
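The talk's exact code is not reproduced here. As a hedged sketch, the backend half of a text-to-image search might look like the following. To keep it client-agnostic and testable, the Weaviate call sits behind a one-method interface, `NearTextSource`, which is assumed to mirror `query.nearText(text, { limit })` on a collection in the v3 `weaviate-client` package; older clients expose a GraphQL query builder instead, so check the documentation for your version. The `q` parameter name and the default limit are hypothetical.

```typescript
// Minimal structural type for the one call this sketch needs. Assumption:
// it mirrors `collection.query.nearText(text, { limit })` in the v3
// `weaviate-client` package; verify against the client version you use.
interface NearTextSource {
  nearText(
    text: string,
    opts: { limit: number },
  ): Promise<{ objects: { properties: Record<string, unknown> }[] }>;
}

// Read and validate the `q` search parameter (hypothetical name).
function readSearchTerm(url: string): string | null {
  const raw = new URL(url).searchParams.get("q");
  const term = raw === null ? "" : raw.trim();
  return term.length > 0 ? term : null;
}

// Framework-neutral handler: a Next.js route handler could call this with
// `request.url` and wrap the returned status and body in a Response.
async function searchByText(
  source: NearTextSource,
  url: string,
  limit: number = 6,
): Promise<{ status: number; body: unknown }> {
  const term = readSearchTerm(url);
  if (term === null) {
    return { status: 400, body: { error: "missing query parameter q" } };
  }
  // Weaviate vectorises `term` with the collection's configured vectorizer
  // and returns the objects whose vectors are nearest to it.
  const result = await source.nearText(term, { limit });
  return { status: 200, body: result.objects.map((o) => o.properties) };
}
```

Passing a real collection's `query` object as `source` relies on the interface assumption above; in tests, a fake object with a `nearText` method works just as well.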

#9 (about 4 minutes)

Implementing visual search with an image query

The code for an image-to-image search is explained, showing how a base64-encoded image is sent to the backend to perform a `nearImage` vector search.
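The image path can be sketched the same way. The helpers below show the two base64 steps involved: encoding raw bytes (for example from `File.arrayBuffer()` in the browser) and stripping the `data:image/...;base64,` prefix that `FileReader.readAsDataURL` produces. `NearImageSource` is assumed to mirror `query.nearImage(base64, { limit })` in the v3 `weaviate-client`; the request body shape `{ image }` is hypothetical.

```typescript
// Turn raw file bytes into base64. btoa expects a "binary string" with one
// character per byte, so build that first.
function bytesToBase64(bytes: Uint8Array): string {
  let binary = "";
  for (let i = 0; i < bytes.length; i++) binary += String.fromCharCode(bytes[i]);
  return btoa(binary);
}

// FileReader.readAsDataURL yields "data:image/png;base64,<payload>". Strip the
// prefix, since (assumption) the backend wants only the base64 payload.
function dataUrlToBase64(dataUrl: string): string {
  const match = /^data:image\/[\w.+-]+;base64,([A-Za-z0-9+/=]+)$/.exec(dataUrl);
  if (match === null) throw new Error("expected a base64-encoded image data URL");
  return match[1];
}

// Assumption: mirrors `collection.query.nearImage(base64, { limit })`.
interface NearImageSource {
  nearImage(
    base64: string,
    opts: { limit: number },
  ): Promise<{ objects: { properties: Record<string, unknown> }[] }>;
}

// Framework-neutral handler for a hypothetical JSON body `{ image }`.
async function searchByImage(
  source: NearImageSource,
  body: { image?: string },
  limit: number = 6,
): Promise<{ status: number; body: unknown }> {
  if (!body.image) return { status: 400, body: { error: "missing image" } };
  const base64 = body.image.startsWith("data:") ? dataUrlToBase64(body.image) : body.image;
  const result = await source.nearImage(base64, { limit });
  return { status: 200, body: result.objects.map((o) => o.properties) };
}
```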

#10 (about 2 minutes)

Expanding vision to other creative applications

Beyond e-commerce, multimodal vision can be applied to creative use cases like movie recommenders, educational tools, and map navigation.

Related jobs

Jobs that call for the skills explored in this talk.

Technoly GmbH

Berlin, Germany

Senior

JavaScript

Angular

+1

Hubert Burda Media

München, Germany

€80-95K

Intermediate

Senior

JavaScript

Node.js

+1

Matching moments

11:20 MIN

A tour of creative code demos and useful developer tools

WeAreDevelopers LIVE – PHP Is Alive and Kicking and More

08:03 MIN

Exploring modern tools for web interaction and analysis

WeAreDevelopers LIVE - the weekly developer show with Chris Heilmann and Daniel Cranney

01:57 MIN

Presenting live web scraping demos at a developer conference

Tech with Tim at WeAreDevelopers World Congress 2024

01:14 MIN

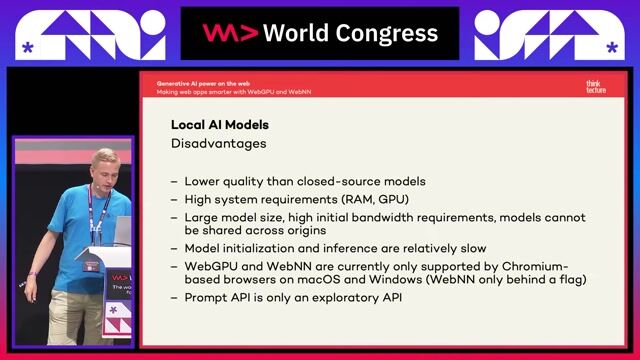

The future of on-device AI in web development

Generative AI power on the web: making web apps smarter with WebGPU and WebNN

00:52 MIN

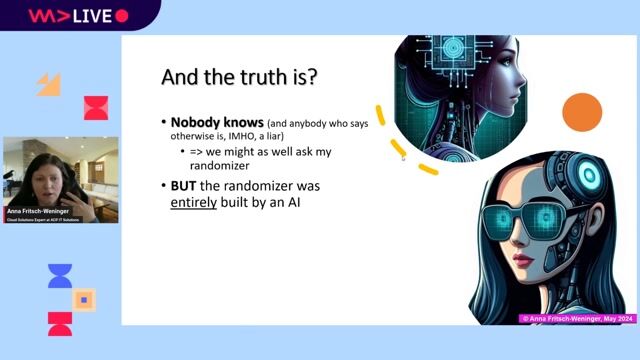

Will AI replace developers? An AI-built demo

From Syntax to Singularity: AI’s Impact on Developer Roles

01:57 MIN

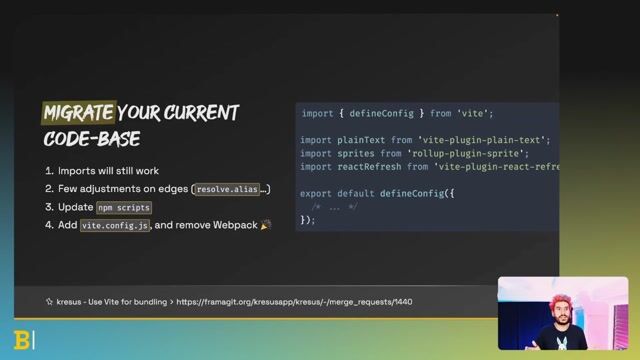

The future of web development is faster and simpler

The Eternal Sunshine of the Zero Build Pipeline

10:29 MIN

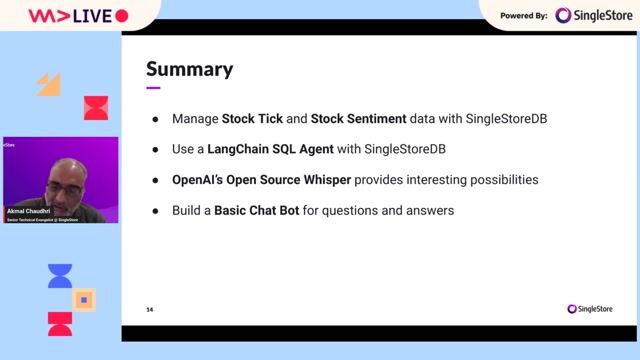

Exploring the future of AI in FinTech

OpenAI for FinTech: Building a Stock Market Advisor Chatbot

02:46 MIN

A demo of client-side AI using the NPU

Privacy-first in-browser Generative AI web apps: offline-ready, future-proof, standards-based

Featured Partners

Related Videos

1:12:04

1:12:04WeAreDevelopers LIVE - the weekly developer show with Chris Heilmann and Daniel Cranney

21:35

21:35Build UIs that learn - Discover the powerful combination of UI and AI

Eliran Natan

1:03:08

1:03:08WAD Live 22/01/2025: Exploring AI, Web Development, and Accessibility in Tech with Stefan Judis

43:53

43:53Web APIs you might not know about

Sasha Shynkevich

41:40

41:40Virtual Reality – The path to create your world

Drishti Jain

41:43

41:43Modern Web Development with Nuxt3

Alexander Lichter

44:43

44:43Explore new web features before everyone else

Nikita Dubko

53:46

53:46WeAreDevelopers LIVE – AI vs the Web & AI in Browsers

Chris Heilmann, Daniel Cranney & Raymond Camden

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

Visonum GmbH

Remote

Junior

Intermediate

React

Redux

TypeScript

NeuralAI

Remote

€40-80K

API

C++

WebGL

+7

NeuralAI

Remote

€4-8K

Senior

API

C++

WebGL

+7

NeuralAI

Remote

€50-100K

Senior

API

C++

WebGL

+7

Optimus Search

Berlin, Germany

Remote

Intermediate

API

CSS

GIT

React

+4