Lee Boonstra

Raise your voice!

#1 about 1 minute

Building a custom voice AI with WebRTC and Google APIs

An overview of the architecture for streaming voice from a browser to a backend for processing with conversational AI.

#2 about 4 minutes

Comparing custom voice AI to public assistants

A custom voice AI provides more control over technical requirements and terms of service compared to public platforms like Google Assistant or Alexa.

#3 about 1 minute

Handling short versus long user utterances

Public assistants are optimized for short commands, whereas custom AI for use cases like contact centers must be designed to handle long, complex user stories.

#4 about 3 minutes

Demo of a voice-enabled self-service kiosk

A demonstration of a web-based airport kiosk that answers user questions spoken in different languages using a custom voice AI.

#5 about 1 minute

The core challenge of integrating voice technologies

The main difficulty in building a voice AI is not using individual APIs, but integrating the entire pipeline from frontend audio stream to backend processing.

#6 about 3 minutes

Capturing cross-browser microphone audio with RecordRTC

The RecordRTC library is used to abstract away browser inconsistencies and reliably capture microphone audio streams for processing.
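As a rough illustration of the capture step, the sketch below builds a recorder configuration. The option names (`type`, `mimeType`, `desiredSampRate`, `numberOfAudioChannels`, `timeSlice`, `ondataavailable`) follow RecordRTC's documented options, but the values and the helper `buildRecorderOptions` are assumptions for illustration, not settings confirmed by the talk.

```typescript
// Option names follow RecordRTC's documented configuration.
// The values below are illustrative assumptions (mono, 16 kHz is a common
// choice for speech recognition), not settings taken from the talk.
interface AudioRecorderOptions {
  type: "audio";
  mimeType: string;
  desiredSampRate: number; // target sample rate in Hz (RecordRTC's spelling)
  numberOfAudioChannels: 1 | 2;
  timeSlice: number; // milliseconds between ondataavailable callbacks
  ondataavailable: (chunk: unknown) => void;
}

// Hypothetical helper: returns options that hand each recorded slice to onChunk.
function buildRecorderOptions(
  onChunk: (chunk: unknown) => void,
  timeSliceMs: number = 4000
): AudioRecorderOptions {
  return {
    type: "audio",
    mimeType: "audio/wav",
    desiredSampRate: 16000,
    numberOfAudioChannels: 1,
    timeSlice: timeSliceMs,
    ondataavailable: onChunk,
  };
}

// In a browser this might be wired up roughly as follows (not executed here):
//   const stream = await navigator.mediaDevices.getUserMedia({ audio: true });
//   const recorder = new RecordRTC(stream, {
//     ...buildRecorderOptions(sendToBackend),
//     recorderType: RecordRTC.StereoAudioRecorder,
//   });
//   recorder.startRecording();
```

Keeping the options in a plain function makes the capture settings easy to check without a browser or a microphone.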

#7 about 2 minutes

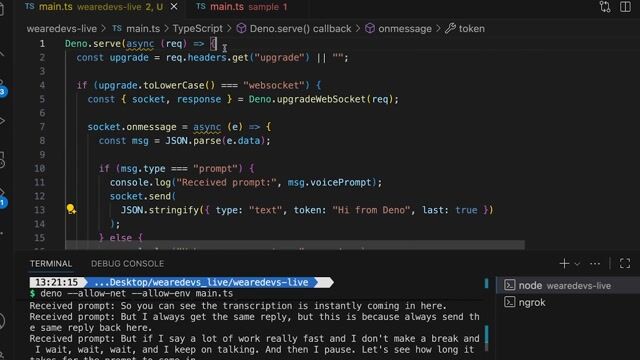

Streaming audio to the backend with Socket.IO

Socket.IO and the socket.io-stream module enable real-time, bidirectional streaming of binary audio data from the browser to a Node.js backend.
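The idea of sending binary audio in pieces and reassembling it on the other side can be sketched without any networking. The two helpers below are hypothetical and are not part of Socket.IO or socket.io-stream, which handle the transport themselves; they only illustrate what chunked binary transfer means.

```typescript
// Hypothetical helpers, independent of Socket.IO: split a binary buffer into
// fixed-size chunks (sender side) and join chunks back together (receiver side).
function splitIntoChunks(data: Uint8Array, chunkSize: number): Uint8Array[] {
  if (chunkSize <= 0) {
    throw new Error("chunkSize must be positive");
  }
  const chunks: Uint8Array[] = [];
  for (let offset = 0; offset < data.length; offset += chunkSize) {
    // slice copies the bytes; the last chunk may be shorter than chunkSize
    chunks.push(data.slice(offset, offset + chunkSize));
  }
  return chunks;
}

function concatChunks(chunks: Uint8Array[]): Uint8Array {
  const total = chunks.reduce((sum, chunk) => sum + chunk.length, 0);
  const out = new Uint8Array(total);
  let offset = 0;
  for (const chunk of chunks) {
    out.set(chunk, offset);
    offset += chunk.length;
  }
  return out;
}
```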

#8 about 3 minutes

Transcribing audio with the Speech-to-Text API

Google's Speech-to-Text API converts the incoming audio stream into text using a streaming recognition call that handles data as it arrives.
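Streaming recognition typically produces interim results that are revised as more audio arrives, followed by a final result for each utterance. The sketch below shows one way a backend might track that. `RecognitionEvent` is a simplified, hypothetical shape (the real streaming responses nest the transcript inside results and alternatives), and `TranscriptAccumulator` is not part of any Google library.

```typescript
// Simplified, hypothetical event shape: one transcript plus a finality flag.
interface RecognitionEvent {
  transcript: string;
  isFinal: boolean;
}

// Keeps finalized utterances and the latest interim guess separately.
class TranscriptAccumulator {
  private finalized: string[] = [];
  private interim = "";

  push(event: RecognitionEvent): void {
    const text = event.transcript.trim();
    if (event.isFinal) {
      this.finalized.push(text);
      this.interim = ""; // the interim guess is superseded by the final result
    } else {
      this.interim = text; // each interim result replaces the previous one
    }
  }

  // Text that is safe to pass on for intent detection.
  finalText(): string {
    return this.finalized.join(" ");
  }

  // Text suitable for live display, including the current interim guess.
  displayText(): string {
    return [...this.finalized, this.interim]
      .filter((part) => part.length > 0)
      .join(" ");
  }
}
```

Separating final from interim text keeps unstable guesses out of the later processing steps while still allowing live feedback in the UI.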

#9 about 4 minutes

Understanding user intent with Dialogflow

Dialogflow uses natural language understanding to match transcribed user text to predefined intents, entities, and knowledge bases to determine the user's goal.
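To make the hand-off from transcript to intent matching concrete, the sketch below builds a text query in the shape used by the Dialogflow ES (v2) `detectIntent` request. The talk summary does not say which Dialogflow edition is used, so ES is an assumption here, and `buildDetectIntentRequest` is a hypothetical helper.

```typescript
// Request shape for a text query, assuming Dialogflow ES (v2) detectIntent.
interface DetectIntentRequest {
  session: string;
  queryInput: {
    text: { text: string; languageCode: string };
  };
}

// Hypothetical helper: wraps transcribed text in a detectIntent request.
function buildDetectIntentRequest(
  projectId: string,
  sessionId: string,
  text: string,
  languageCode: string = "en-US"
): DetectIntentRequest {
  return {
    // The session path ties consecutive queries to one conversation.
    session: `projects/${projectId}/agent/sessions/${sessionId}`,
    queryInput: {
      text: { text, languageCode },
    },
  };
}
```

For example, `buildDetectIntentRequest("my-project", "kiosk-1", "Where is my gate?")` yields a request whose session is `projects/my-project/agent/sessions/kiosk-1`. Both identifiers are placeholders.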

#10 about 4 minutes

Adding multi-language support with the Translate API

The Translate API enables multi-language support by translating foreign language input to English for Dialogflow processing and then translating the response back.
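The translate, process, translate-back flow described above can be sketched with the external services passed in as functions. The `Translate` and `DetectIntent` types and the function `answerInUserLanguage` are hypothetical; in a real backend they would wrap the Translate API and Dialogflow clients.

```typescript
// Hypothetical function types standing in for the real API clients.
type Translate = (text: string, targetLang: string) => Promise<string>;
type DetectIntent = (agentLanguageText: string) => Promise<string>;

// Translate the user's text into the agent's language, get the agent's reply,
// then translate the reply back. Skips translation when the languages match.
async function answerInUserLanguage(
  userText: string,
  userLang: string,
  translate: Translate,
  detectIntent: DetectIntent,
  agentLang: string = "en"
): Promise<string> {
  const sameLanguage = userLang === agentLang;
  const query = sameLanguage ? userText : await translate(userText, agentLang);
  const reply = await detectIntent(query);
  return sameLanguage ? reply : translate(reply, userLang);
}
```

Injecting the two services keeps the agent itself in a single language and makes the flow testable with stubs.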

#11 about 3 minutes

Generating audio responses with Text-to-Speech

The Text-to-Speech API synthesizes a natural-sounding voice from the text response, which is then sent back to the browser as an audio buffer to be played.
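A synthesis call needs the text, a voice selection, and an output encoding. The sketch below builds such a request using the field names of the Text-to-Speech `synthesize` request (`input`, `voice`, `audioConfig`). The default values and the helper `buildSynthesisRequest` are assumptions for illustration.

```typescript
// Field names follow the Text-to-Speech synthesize request; the defaults
// chosen here are illustrative assumptions.
interface SynthesisRequest {
  input: { text: string };
  voice: { languageCode: string; ssmlGender: "NEUTRAL" | "FEMALE" | "MALE" };
  audioConfig: { audioEncoding: "MP3" | "LINEAR16" | "OGG_OPUS" };
}

// Hypothetical helper: wraps the agent's text reply in a synthesis request.
function buildSynthesisRequest(
  text: string,
  languageCode: string = "en-US"
): SynthesisRequest {
  return {
    input: { text },
    voice: { languageCode, ssmlGender: "NEUTRAL" },
    // A compressed encoding keeps the buffer sent back to the browser small.
    audioConfig: { audioEncoding: "MP3" },
  };
}
```

The audio bytes in the response can then be sent to the browser over the same socket connection and played there.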

#12 about 1 minute

Deployment considerations and open source code

Deploying a voice application requires HTTPS, because browsers only grant microphone access on secure origins. Services like App Engine Flex make this easy to configure. The full project code is available on GitHub.
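The HTTPS requirement comes from browsers exposing microphone capture only in secure contexts. The function below is a simplified approximation of that rule, useful for reasoning about deployment URLs. It is a hypothetical helper, not a browser API; in a page the authoritative check is `window.isSecureContext`.

```typescript
// Simplified approximation of the secure-context rule for microphone access:
// HTTPS origins qualify, and so do plain-HTTP loopback hosts used in development.
// Hypothetical helper; it ignores edge cases such as IPv6 loopback addresses.
function allowsMicrophoneAccess(origin: string): boolean {
  const match = /^(https?):\/\/([^\/:]+)/i.exec(origin);
  if (!match) {
    return false; // not an http(s) URL
  }
  const scheme = match[1].toLowerCase();
  const host = match[2].toLowerCase();
  if (scheme === "https") {
    return true;
  }
  return host === "localhost" || host === "127.0.0.1";
}
```

This is why a kiosk that works on `http://localhost:3000` during development can fail to record once it is served over plain HTTP from a public host.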

Related jobs

Jobs that call for the skills explored in this talk.

ROSEN Technology and Research Center GmbH

Osnabrück, Germany

Senior

TypeScript

React

+3

Wilken GmbH

Ulm, Germany

Senior

Amazon Web Services (AWS)

Kubernetes

+1

Matching moments

09:56 MIN

Adding conversational intelligence with OpenAI and streaming

WeAreDevelopers LIVE – Real-Time Phone Agents, Unsafe VPNs & More

02:20 MIN

Using AI for a conversational developer experience

Platform Engineering untold truths: is just an infrastructure matter?

03:23 MIN

Building real-time conversational agents

Prompt API & WebNN: The AI Revolution Right in Your Browser

03:56 MIN

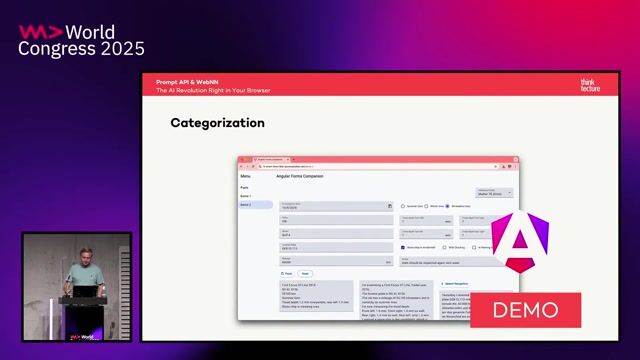

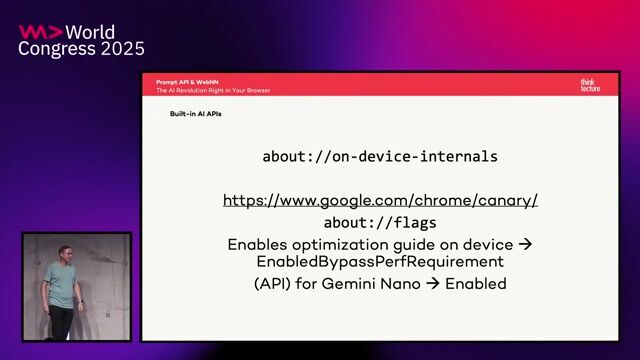

Practical use cases for on-device AI

Prompt API & WebNN: The AI Revolution Right in Your Browser

04:16 MIN

Democratizing software development with natural language

Agents for the Sake of Happiness

01:26 MIN

Live demo of the AI-powered translation application

Rust and Docker: Let's build an AI-powered app!

02:54 MIN

Revitalizing voice communication with AI for customer support

API‑First: How Twilio Designs for Developers - Justin Kitagawa (Twilio)

04:47 MIN

Why natural voice AI has been so difficult

Hello JARVIS - Building Voice Interfaces for Your LLMS

Featured Partners

Related Videos

55:27

55:27Creating bots with Dialogflow CX

Xavier Portilla Edo

53:46

53:46WeAreDevelopers LIVE – AI vs the Web & AI in Browsers

Chris Heilmann, Daniel Cranney & Raymond Camden

29:35

29:35Minimal infrastructure for Real‑Time Phone Agents: transcripts in, responses out

Chris Heilmann, Daniel Cranney, Marius Obert & Staff Developer Evangelist at Twilio

56:33

56:33OpenAI for FinTech: Building a Stock Market Advisor Chatbot

Akmal Chaudhri

1:16:15

1:16:15WeAreDevelopers LIVE – Real-Time Phone Agents, Unsafe VPNs & More

Chris Heilmann, Daniel Cranney & Marius Obert

58:06

58:06From Syntax to Singularity: AI’s Impact on Developer Roles

Anna Fritsch-Weninger

48:27

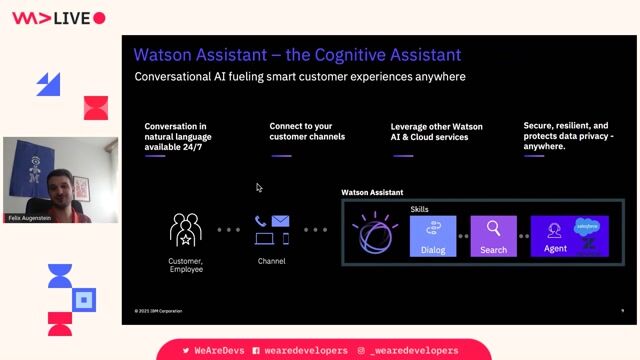

48:27Integrate your Cognitive Assistant with 3rd-party DBs and software

Felix Augenstein

27:23

27:23From ML to LLM: On-device AI in the Browser

Nico Martin

Related Articles

View all articles

From learning to earning

Jobs that call for the skills explored in this talk.

DeepL

Amsterdam, Netherlands

Remote

.NET

React

Kafka

Node.js

+3

MANGO

Palau-solità i Plegamans, Spain

API

Azure

Redis

Node.js

Salesforce

+6

CM.com N.V.

Breda, Netherlands

Senior

Azure

Python

Kubernetes

Microservices

Machine Learning

+2

DeepL

Amsterdam, Netherlands

Remote

Senior

API

React

.NET Core

DeepL

Charing Cross, United Kingdom

Remote

Senior

API

React

.NET Core