Karl Groves

Data Mining Accessibility

#1 · about 4 minutes

How large-scale data was collected for accessibility research

The research analyzed 14.6 million errors collected from 6 million URLs, a dataset large enough to be statistically significant.

#2 · about 8 minutes

Common accessibility issues found through automated testing

Automated analysis reveals that 90% of issues fall into five categories, with missing alt text and navigation problems being the most frequent.

#3 · about 9 minutes

Analyzing the results of manual accessibility audits

Manual audits of representative samples show that keyboard accessibility and color contrast are the top issues, and that nearly half of the issues found are high severity.

#4 · about 8 minutes

The strong correlation between automated and manual testing

The data shows significant overlap between automated and manual findings: seven of the top ten failing success criteria are the same in both.

#5 · about 3 minutes

Why testing before deployment is twice as effective

Pages tested before deployment have fewer than half as many issues as pages tested after deployment, which shows that a reactive audit-and-fix cycle is ineffective.

#6 · about 8 minutes

Applying extreme programming principles to accessibility

Accessibility becomes sustainable when teams adopt practices such as early automation, specific acceptance tests, and pair programming, and treat accessibility as a core quality problem.
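As a hedged illustration only (not a test or tool from the talk), the sketch below shows what one "specific acceptance test" might look like: it fails when rendered markup contains an `<img>` with no `alt` attribute, the most frequent automated finding named in chapter #2. The names `MissingAltChecker`, `images_missing_alt`, and the sample markup are hypothetical; only Python's standard-library `html.parser` is assumed.

```python
from __future__ import annotations

from html.parser import HTMLParser


class MissingAltChecker(HTMLParser):
    """Hypothetical checker: records <img> tags that have no alt attribute."""

    def __init__(self) -> None:
        super().__init__()
        self.violations: list[str] = []

    def handle_starttag(self, tag: str, attrs: list[tuple[str, str | None]]) -> None:
        # HTMLParser lowercases tag and attribute names before calling this.
        # Self-closing <img/> also lands here via the default handle_startendtag.
        attr_map = dict(attrs)
        # alt="" is deliberately allowed: it marks an image as decorative.
        if tag == "img" and "alt" not in attr_map:
            self.violations.append(attr_map.get("src") or "(no src)")


def images_missing_alt(html: str) -> list[str]:
    """Return the src of every <img> in `html` that lacks an alt attribute."""
    checker = MissingAltChecker()
    checker.feed(html)
    checker.close()
    return checker.violations


def test_card_images_have_alt() -> None:
    # Hypothetical component markup; a real team would render its own.
    html = '<div class="card"><img src="logo.png" alt="Acme"><img src="rule.png" alt=""></div>'
    assert images_missing_alt(html) == []
```

Run under a test runner such as pytest, this check fails the build as soon as an unlabeled image is introduced, which is the "test before deployment" idea from chapter #5 applied to a single success criterion. Checking for the attribute's presence rather than a non-empty value is a design choice that avoids flagging decorative images.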

#7 · about 17 minutes

Q&A on developer tooling and testing best practices

The discussion covers developer resistance to in-IDE linting, the causes of false positives in tools, and the need for a layered testing strategy.


Matching moments

07:01 MIN

The importance of web accessibility as a core developer craft

WAD Live 22/01/2025: Exploring AI, Web Development, and Accessibility in Tech with Stefan Judis

01:44 MIN

Making accessibility a core part of your development process

Oh S***! There's a New Accessibility Law and I'm Not Ready!

02:09 MIN

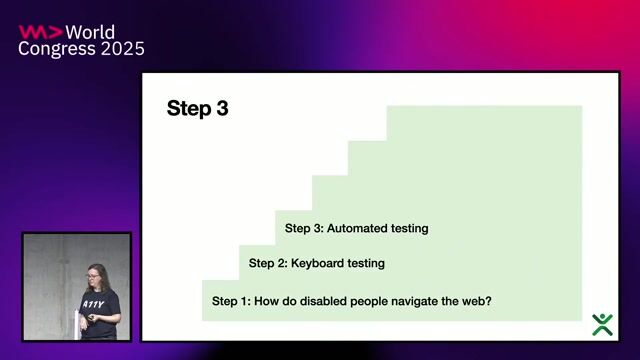

Using automated tools to find common accessibility issues

Oh S***! There's a New Accessibility Law and I'm Not Ready!

00:57 MIN

Adopting a user-centric accessibility mindset

Decoding web accessibility through audit

05:18 MIN

Integrating accessibility into core developer education

Fireside Chat: Can Regulation Improve Accessibility? - Léonie Watson

01:57 MIN

Why you should integrate accessibility early

Going on a CODE100 Accessibility Scavenger Hunt

01:22 MIN

Introducing the shift left approach to accessibility

Shift Left On Accessibility - Geri Reid

04:37 MIN

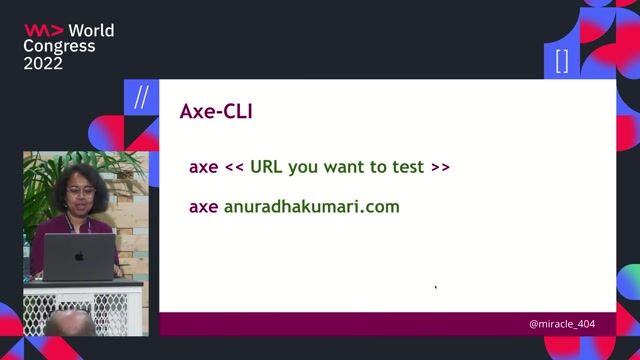

Using automated tools for accessibility testing

Going on a CODE100 Accessibility Scavenger Hunt

Featured Partners

Related Videos

56:01

56:01Preventing Accessibility Issues Instead Of Fixing Them

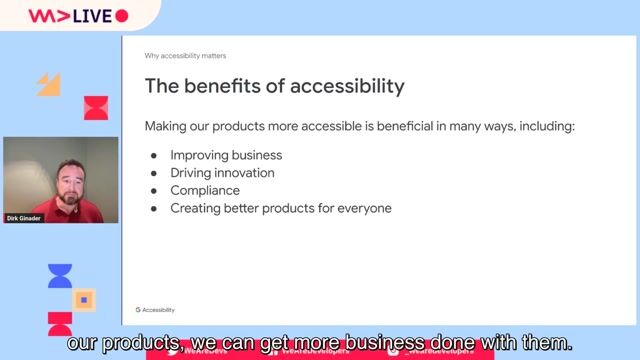

Dirk Ginader

31:30

31:30Accessibility powered by AI

Ramona Domen

32:00

32:00Is This App Accessible? A Live Testing Demo

Eeva-Jonna Panula

35:39

35:39Going on a CODE100 Accessibility Scavenger Hunt

Chris Heilmann & Daniel Cranney

30:07

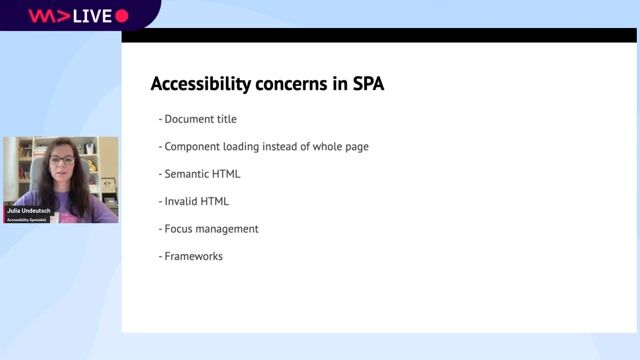

30:07Accessibility in React Application

Julia Undeutsch

27:16

27:16Mastering Keyboard Accessibility

Tanja Ulianova

55:32

55:32ARIA: the good parts

Hidde de Vries

29:59

29:59The What, Why, Who and How of accessibility on the web

Konstantin Tieber

Related Articles

View all articles
